The Quest For Artificial Intelligence

A Visual Timeline

Antiquity

Artificial life has been in the imagination of people since the dawn of civilization. In Greek mythology, The Iliad of Homer speaks of self-propelled chairs called tripods, and of "golden attendants". Pygmalion of Cyprus carved Galatea, an ivory statue that was brought to life by Venus. In Asia, the mechanical engineer Yan Shi presented King Mu of Zhou with life-size automata that were able to walk, move their heads, and even sing.

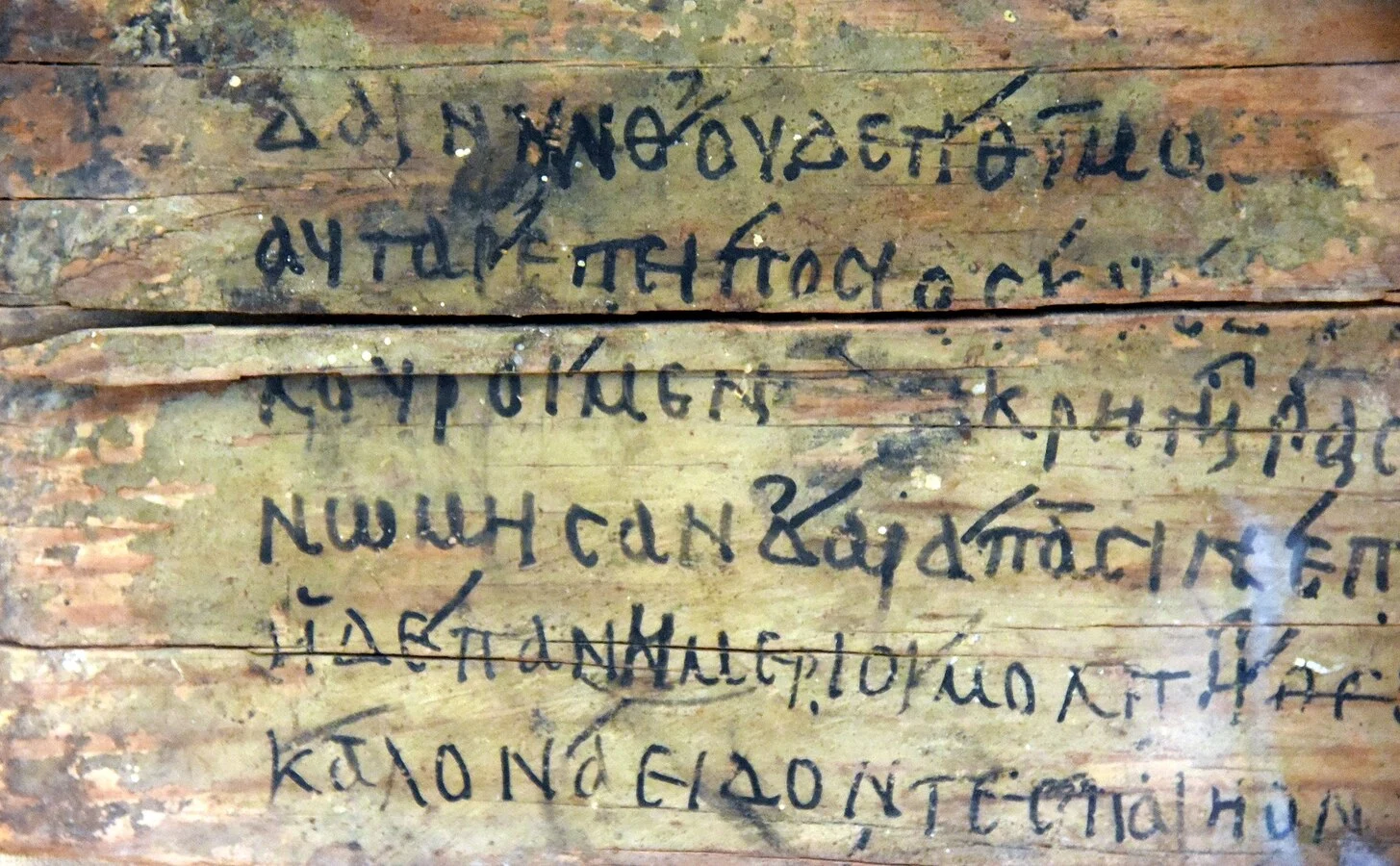

350 BC

The Greek philosopher Aristotle tries to analyze and codify the process of logical reasoning in his book Prior Analytics. He identifies a specific type of reasoning, the syllogism, with this famous example:

- All men are mortal.

- Socrates is a man.

- Therefore, Socrates is mortal.

270 BC

Greek inventor and barber Ctesibius improves the water clock by adding a system that keeps the water level constant. A floating cork attached to a rod turns on the incoming water flow when the level is too low, and turns it off when the level is too high, making it the world's first self-regulatory feedback system.
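The on/off behavior described above can be sketched as a small simulation. This is only an illustration: the function name `simulate_float_valve`, the thresholds, and the flow rates are assumptions chosen for the example, not measurements of the historical clock.

```python
def simulate_float_valve(steps, level=5.0, low=4.0, high=6.0,
                         inflow=1.0, outflow=0.4):
    """Simulate an on/off float valve and return the level after each step.

    All parameter values are hypothetical and only serve the illustration.
    """
    valve_open = False
    history = []
    for _ in range(steps):
        # The float switches the valve based on the current water level.
        if level < low:
            valve_open = True
        elif level > high:
            valve_open = False
        # Water always drains; it is refilled only while the valve is open.
        level += (inflow if valve_open else 0.0) - outflow
        history.append(level)
    return history


levels = simulate_float_valve(200)
# The level keeps oscillating inside a narrow band around the thresholds.
print(min(levels), max(levels))
```

Because the valve reacts to the very quantity it controls, the level stays within a bounded band no matter how long the simulation runs.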

780-850

Muḥammad ibn Mūsā al-Khwārizmī writes books containing systematic solutions for arithmetic and algebra, earning him the title "father of algebra". The term algebra itself comes from the title of his book, al-jabr, and even the word algorithm is derived from his name.

1623

Wilhelm Schickard creates a machine that can perform arithmetic calculations. It is capable of addition, subtraction, multiplication, and division.

1646-1716

Gottfried Wilhelm Leibniz is well-known for having co-invented calculus, but he also worked on mechanizing reasoning. He attempted to design a language in which all human knowledge could be formulated, and envisioned a future where logical problems could be solved by performing calculations on this language.

1702-1761

Thomas Bayes formulates Bayes' rule, probably the most important result in probability theory. Nearly all reasoning and decision making takes place in the presence of uncertainty, which makes the rule essential for artificial intelligence as well.
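In modern notation, Bayes' rule reads P(H | E) = P(E | H) P(H) / P(E). The following minimal sketch applies it to a hypothetical diagnostic test; the function name `posterior` and all numbers are assumptions made up for illustration.

```python
def posterior(prior, likelihood, false_positive_rate):
    """Return P(H | E) using Bayes' rule.

    prior:               P(H)
    likelihood:          P(E | H)
    false_positive_rate: P(E | not H)
    """
    # Total probability of observing the evidence, P(E).
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers: 1% base rate, 90% sensitivity, 5% false positives.
p = posterior(prior=0.01, likelihood=0.90, false_positive_rate=0.05)
print(round(p, 3))  # 0.154
```

Even with a fairly accurate test, the low prior keeps the posterior at about 15%, which shows how the rule combines prior belief with new evidence.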

1738

French inventor Jacques de Vaucanson displays his Digesting Duck, an automaton in the form of a duck which can quack, flap its wings, paddle, drink water, and eat and "digest" grain.

1770

Wolfgang von Kempelen constructs The Mechanical Turk, a chess-playing automaton that appears capable of playing strong chess games. Although this was never proven during the 84 years the machine toured various countries, it was actually a hoax: a human chess player hidden inside the construction performed the moves.

1745-1804

A few years after he created the Digesting Duck, Jacques de Vaucanson lays the foundation of an automated loom using punch cards.

The design gets perfected in 1804 by Joseph Marie Jacquard. The Jacquard machine is a device fitted to a loom that simplifies the process of manufacturing textiles with complex patterns. The machine revolutionizes the weaving industry and inspires the development of Charles Babbage's Analytical Engine.

1805

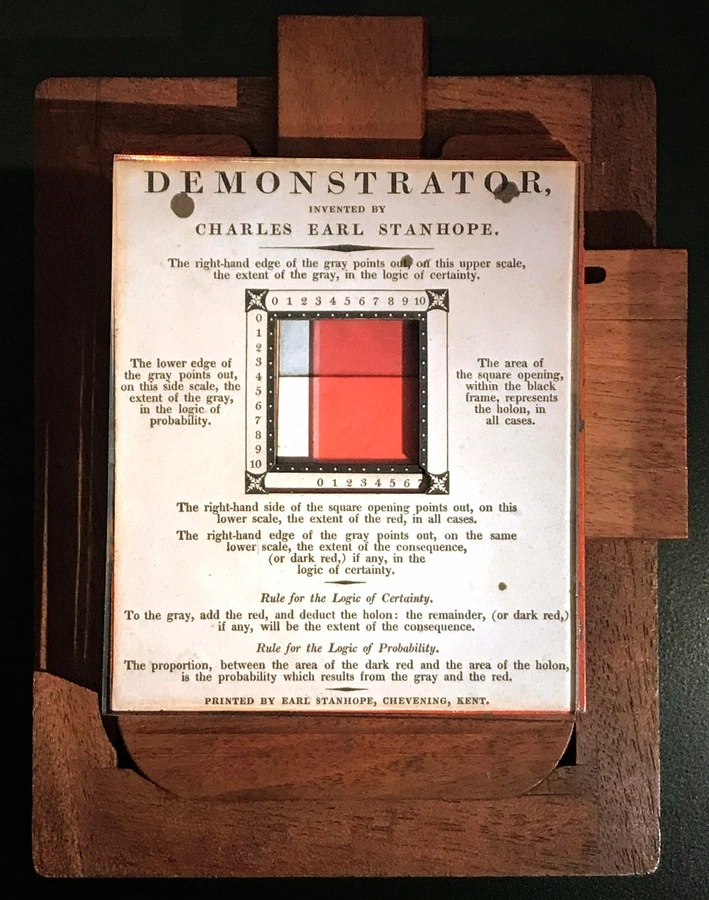

The British scientist and politician Charles Stanhope experiments with devices for solving simple logic problems. The Stanhope Demonstrator is able to calculate certain numerical syllogisms, essentially making it the world's first analog computer. An example problem it can solve is the following:

- Eight of ten A's are B's

- Four of ten A's are C's

- Therefore, at least two B's are C's

The numbers can be set using sliders. Stanhope's work was only made public sixty years after his death.
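The conclusion of such a numerical syllogism follows from a counting argument: if b of n A's are B's and c of n A's are C's, then at least max(0, b + c - n) of them must be both. A minimal sketch, where the function name `min_overlap` is a hypothetical choice and the code says nothing about how the Demonstrator worked mechanically:

```python
def min_overlap(total, count_b, count_c):
    """Least number of items that must be both B and C."""
    if not (0 <= count_b <= total and 0 <= count_c <= total):
        raise ValueError("counts must lie between 0 and total")
    return max(0, count_b + count_c - total)


# Eight of ten A's are B's, four of ten A's are C's.
print(min_overlap(10, 8, 4))  # 2
```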

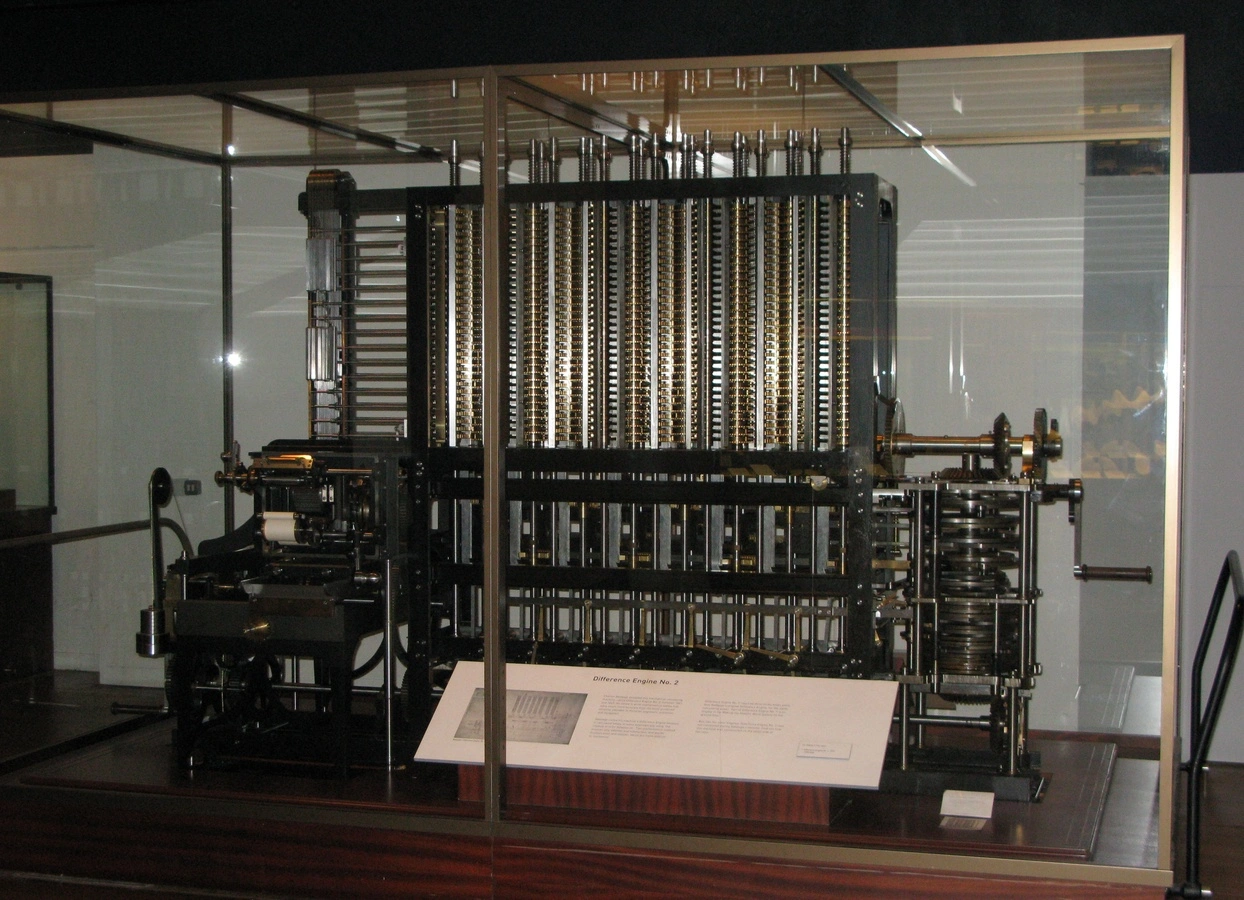

1822

Charles Babbage invents the "Difference Engine", a machine that is able to compute values of polynomial functions. The image shows a replica constructed in 1991.

Later, Babbage worked on a device he called the "Analytical Engine". The machine would implement most ideas needed for general computation. The Analytical Engine was never actually constructed due to lack of funding.

1848

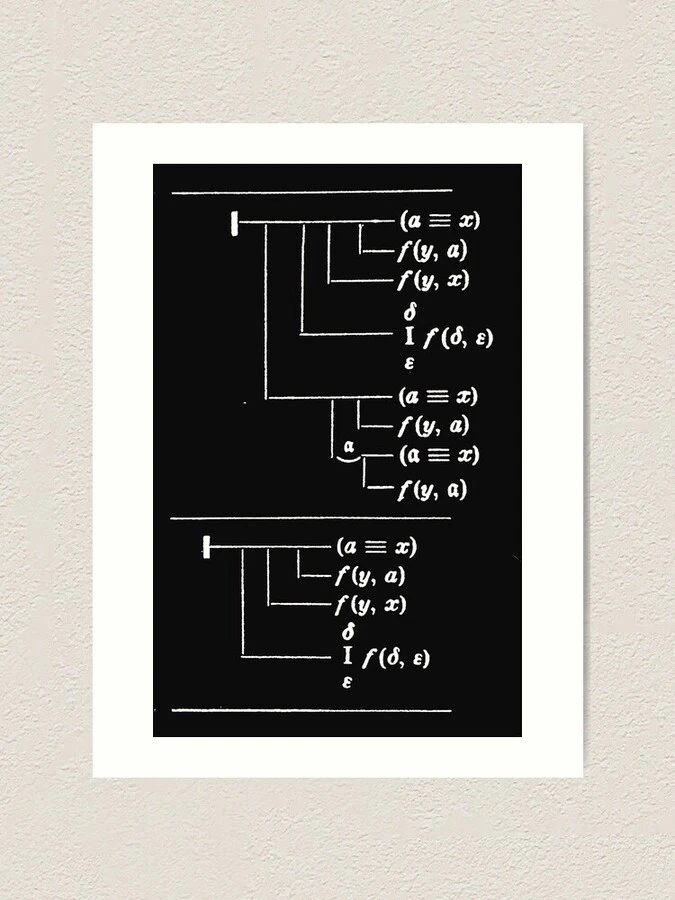

The German logician Gottlob Frege (born 1848) invents Begriffsschrift, published in 1879, a system in which propositions can be written in a graphical form. This system is a forerunner of predicate calculus, an important tool in mechanized reasoning.

1854

George Boole publishes the book An Investigation of the Laws of Thought on Which Are Founded the Mathematical Theories of Logic and Probabilities, wherein he defines Boolean algebra. His work shows that logical reasoning can be performed by manipulating equations representing logical propositions.

1873-1930

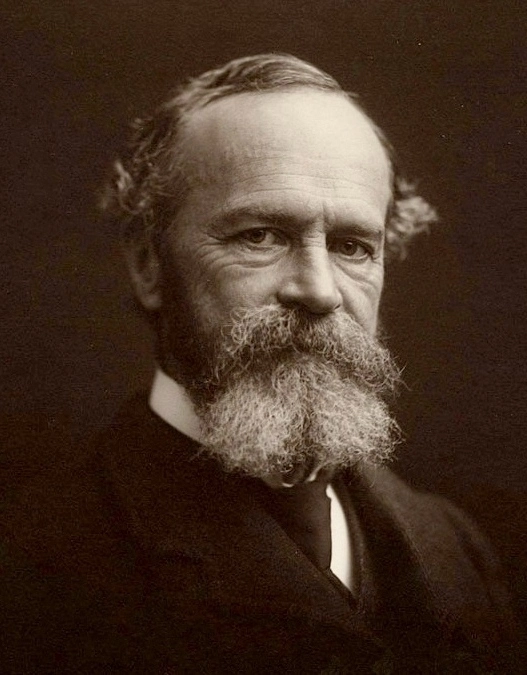

Serious scientific study of the human mind begins in Germany with Wilhelm Wundt (1832-1920) and in America with William James (1842-1910). Some time later, B.F. Skinner (1904-1990) researches the idea of a reinforcing stimulus.

1930s

Logicians lay the foundations of the theory of computation. Alonzo Church describes a class of computable functions, which he calls recursive. Alan Turing describes an imagined "logical computing machine", which is now called a Turing machine. The two notions turn out to be equivalent, and the Church-Turing thesis claims that they capture exactly what can be effectively computed.

1937

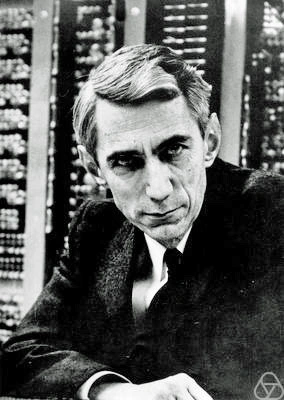

In his master's thesis, Claude Shannon shows that Boolean algebra and binary arithmetic can be used to simplify telephone switching circuits. He also shows that switching circuits can be used to implement Boolean logic, an important concept in computer design.

1941

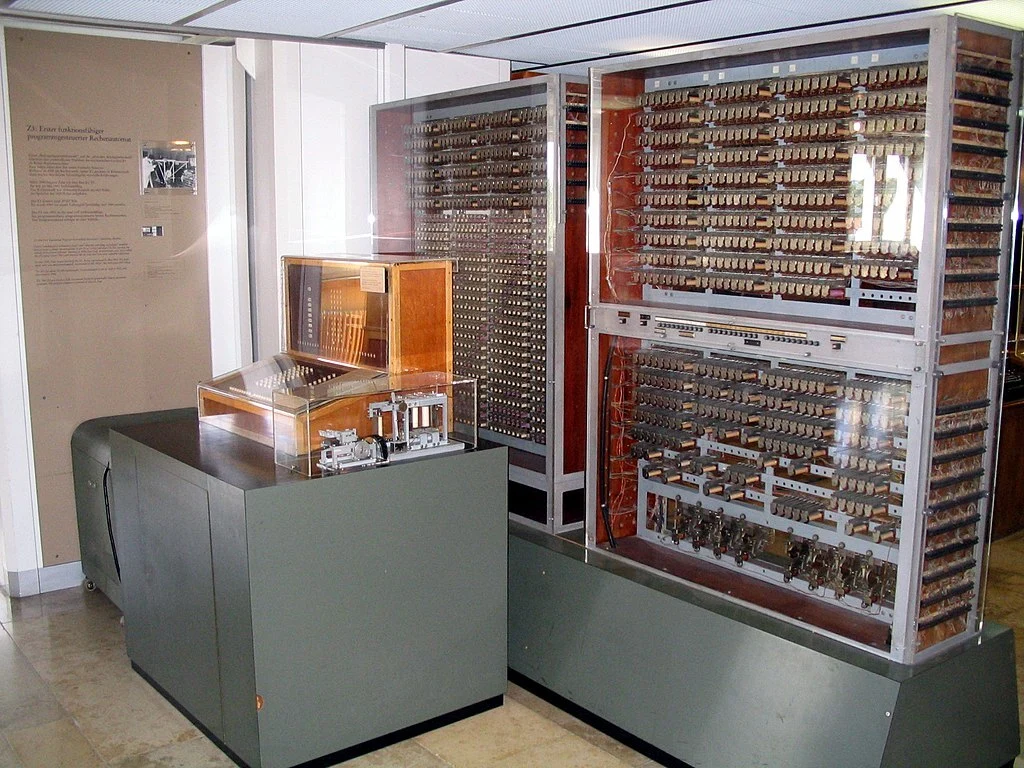

Konrad Zuse constructs the Z3, the world's first programmable general-purpose digital computer.

1943

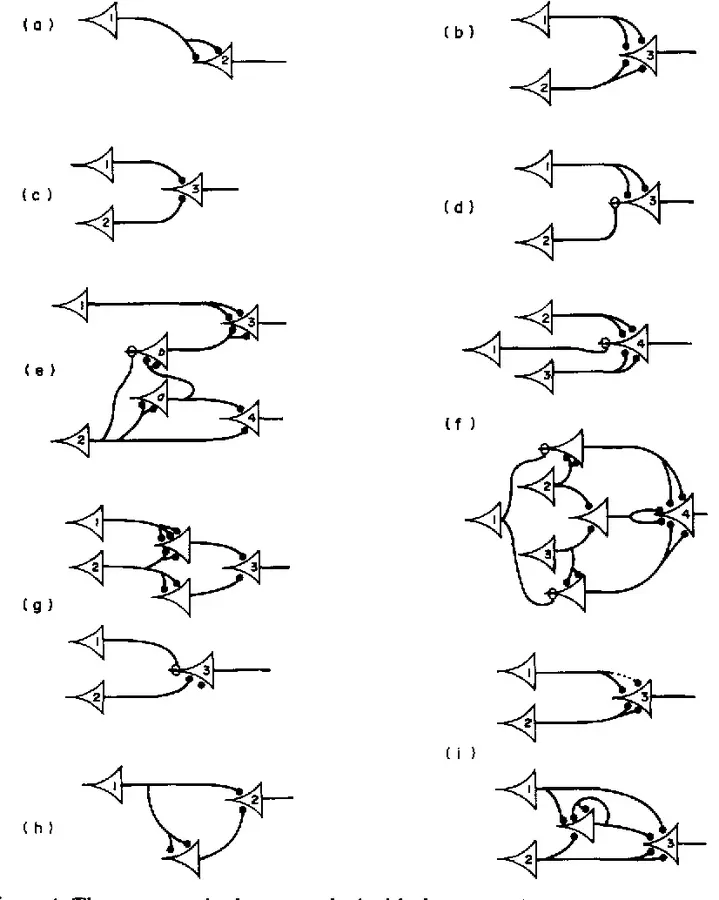

Neurophysiologist Warren McCulloch and logician Walter Pitts publish A logical calculus of the ideas immanent in nervous activity, in which they propose simple models of neurons and show that networks of these models could perform all possible computational operations.

1943

Arturo Rosenblueth, Norbert Wiener, and Julian Bigelow coin the term Cybernetics as the name of the field related to feedback and control systems.

1945

John von Neumann's First Draft of a Report on the EDVAC gets distributed, making it the first published description of a logical design of a computer where the program is stored alongside its data. Others came up with the "stored-program" concept as well, but von Neumann gets the credit because of this publication.

1948

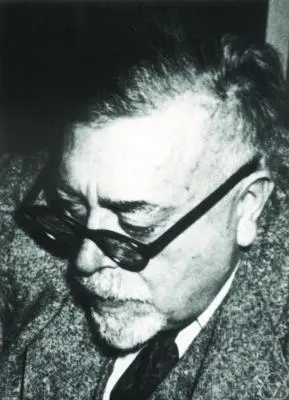

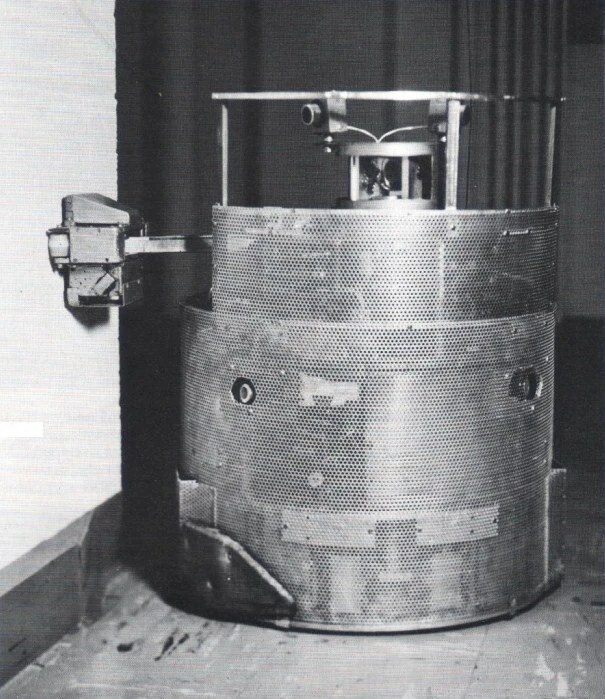

W. Grey Walter introduces "Machina Speculatrix", machines that behave like primitive creatures. Machina Speculatrix are an early example of autonomous robots that are capable of basic sensory-motor interactions. These robots are constructed with electronic components and exhibit behaviors reminiscent of biological organisms.

1948

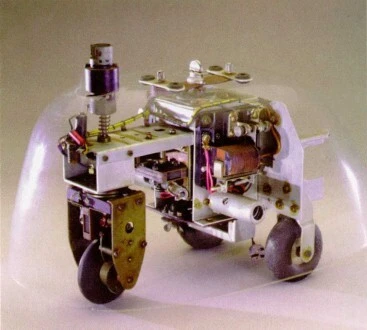

William Ross Ashby builds the homeostat, a device capable of adapting itself to its environment. The homeostat consists of four magnets that are kept in equilibrium through feedback systems.

1948

The Hixon Symposium takes place on September 20, 1948. It is an interdisciplinary conference on how the nervous system controls behavior and how the brain might be compared to a computer.

John von Neumann gives a talk comparing the functions of genes to self-reproducing automata. In another talk, psychologist Karl Lashley lays the foundation of cognitive science.

1950

Alan Turing publishes the article Computing Machinery and Intelligence, in which he devises a test that can show if a machine can think, the famous "Turing test". It also discusses a list of arguments people might use against the possibility of achieving intelligent computers.

It is in this time period, the 1950s, that research into Artificial Intelligence starts taking off, with researchers exploring various pathways that could lead to mechanized intelligence.

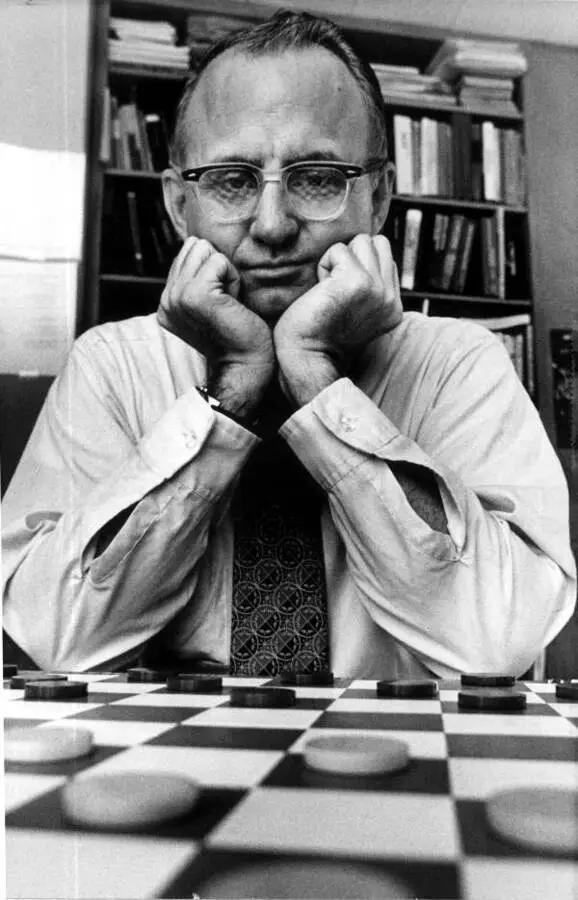

1952-1965

In 1952, Arthur Samuel writes a program that is able to play checkers at a reasonable speed. His interests lay in exploring how to get the program to learn. A first version that incorporates "machine learning" is completed in 1955. In 1962, Samuel's program beats the blind checkers master Robert Nealey.

Samuel experimented with a primitive form of temporal difference learning and rote learning. He also let his program learn from the recorded games of master checkers players.

1954

The process of evolution serves as an inspiration for AI, especially random generation and selective survival. Viral geneticist Nils Aall Barricelli (1912-1993) attempts to simulate evolution on a computer by having numbers migrating and reproducing in a grid.

1956

John McCarthy organizes the Dartmouth Summer Research Project on Artificial Intelligence. The workshop marks the official beginning of serious research and development in artificial intelligence.

1956

Allen Newell, Herbert A. Simon, and Cliff Shaw program a first version of Logic Theorist (LT). LT introduces some concepts that are central to AI:

- Reasoning as a search problem

- Using heuristics to narrow the search space

- Symbolic list processing

LT is capable of proving 38 of the first 52 theorems in Principia Mathematica, even finding new and shorter proofs for some of them.

Using the experience gained from LT, the same team develops the General Problem Solver (GPS) a few years later. The goal of GPS was to encode human problem-solving behavior. For example, GPS was able to split a problem into sub-problems and solve these recursively.

1957

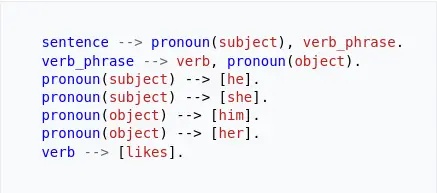

Noam Chomsky publishes Syntactic Structures, an important work in the field of linguistics and Natural Language Processing. Chomsky discusses the independence of syntax from semantics. Take, for example, the sentence "Colorless green ideas sleep furiously". While syntactically correct, its meaning is completely nonsensical.

Chomsky also proposes a set of grammatical rules that can generate syntactically correct sentences in a language.

1950s

Herbert A. Simon and Edward Feigenbaum create EPAM (Elementary Perceiver and Memorizer), a system that is able to learn nonsense syllables that are used in psychological experiments. It is the first program to make use of decision trees.

1958

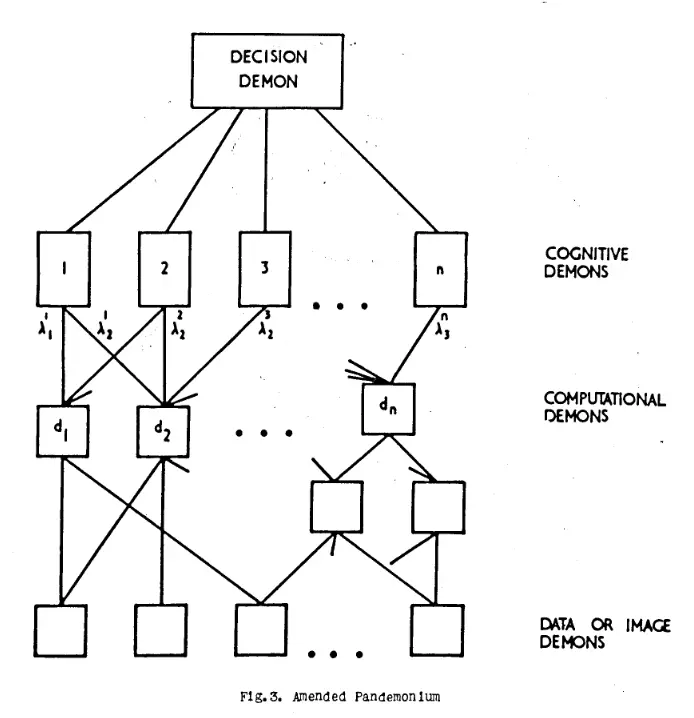

While working on image recognition, Oliver Selfridge introduces a system for learning he calls Pandemonium. He describes components, called demons, that either operate on low-level input functions or higher-level cognitive functions.

The demons work hierarchically: at the lowest level, each demon operates on a certain input aspect, e.g. looking for a line in an image. These demons "shout" at the higher level demons, shouting louder the more certain they are of their input. This continues up to the highest level, where a single demon finally makes a decision.

Around the same time, psychologist Frank Rosenblatt introduces perceptrons, prototype neural networks with an architecture similar to that of Pandemonium.

1958

Richard Friedberg conducts experiments with evolving programs. His work involves generating, mutating, and testing computer programs to explore the concept of program evolution for specific tasks. Unfortunately, his attempts were not very successful.

1950s

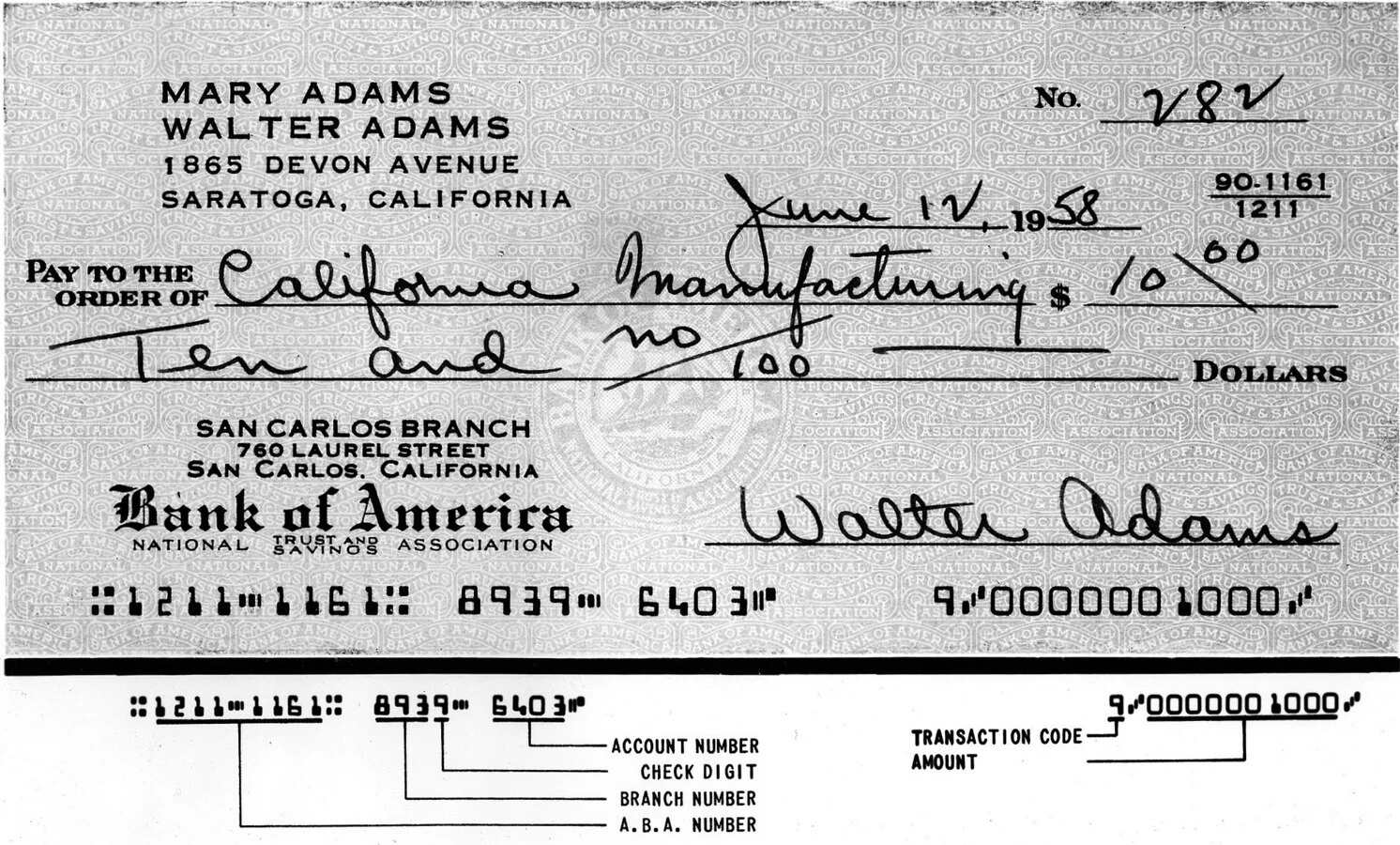

The field of computer vision initially concentrates on the recognition of alphanumeric characters on documents. In the 1950s, magnetic ink character recognition (MICR) is successfully used to read characters at the bottom of checks. Most of the initial recognition methods depend on matching characters with predefined templates.

In 1955, Gerald P. Dinneen presents filtering methods to thicken lines and find edges in images. Oliver Selfridge describes how features can be extracted from these cleaned-up images. Selfridge and Ulrich Neisser combine these methods in 1960 to create a feature-detection method that uses a learning process during which training images are analyzed.

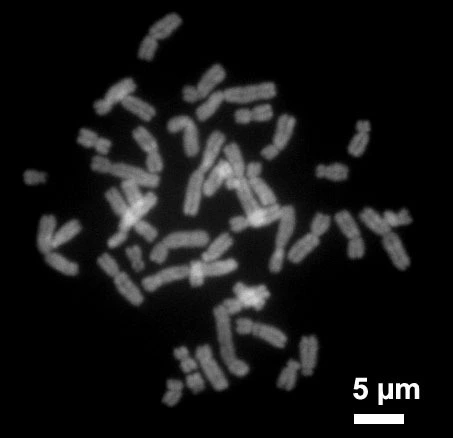

1960

Inspired by the principles of natural selection and evolution, John Holland pioneers the development of genetic algorithms. These algorithms involve the generation, mutation, and selection of candidate solutions to optimization and search problems. Candidate solutions were encoded as strings of 1's and 0's, which he called chromosomes.
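The generate, mutate, and select loop can be sketched as follows. This is a toy illustration rather than Holland's own formulation: the objective (counting 1s), the single-point crossover step, and every parameter value are assumptions, and chromosomes are represented as lists of 0/1 integers instead of strings.

```python
import random


def fitness(chromosome):
    """Toy objective (an assumption): count the 1s in the chromosome."""
    return sum(chromosome)


def mutate(chromosome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in chromosome]


def crossover(parent_a, parent_b, rng):
    """Single-point crossover of two equal-length chromosomes."""
    point = rng.randrange(1, len(parent_a))
    return parent_a[:point] + parent_b[point:]


def evolve(length=20, population_size=30, generations=40, rate=0.02, seed=0):
    """Return the best chromosome and the best fitness per generation."""
    rng = random.Random(seed)
    # Generation: start from random bit strings.
    population = [[rng.randint(0, 1) for _ in range(length)]
                  for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        # Selection: keep the fitter half of the population as parents.
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        parents = population[:population_size // 2]
        # Recombination and mutation refill the population; parents survive.
        children = []
        while len(parents) + len(children) < population_size:
            child = crossover(rng.choice(parents), rng.choice(parents), rng)
            children.append(mutate(child, rate, rng))
        population = parents + children
    population.sort(key=fitness, reverse=True)
    best_per_generation.append(fitness(population[0]))
    return population[0], best_per_generation


best, history = evolve()
print(best, history[0], history[-1])
```

Because the selected parents are carried over unchanged, the best fitness in this sketch can never decrease from one generation to the next.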

1960

John McCarthy publishes the design of LISP, which stands for list processing. LISP programs themselves are lists, making it possible for programs to easily manipulate other programs, or have subprograms embedded in them. Its ease of use and expressive power quickly make it the favored language among Artificial Intelligence researchers.

1960

The world's first Symposium on Bionics takes place. At this time, "bionics" is used to describe the field that learns lessons from nature and applies them to technology.

1960s

Researchers at the Johns Hopkins University build "The Beast", a mobile robot equipped with photocells and sonar. It is able to "survive" on its own by wandering the white halls of the university and plugging itself into black wall outlets to recharge.

1960s

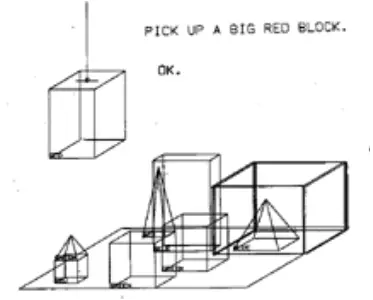

Using Lisp, Terry Winograd develops SHRDLU, an NLP achievement that causes great excitement. SHRDLU can carry on a dialog about a microworld, in this case a world consisting of toy blocks and a gripper that can move these blocks.

SHRDLU understands and executes commands written in ordinary English. It is context-aware, and it asks the user for clarification when it does not understand a command.

Similar systems emerge, such as LUNAR, which specializes in the scientific study of moon rocks, and GUS, which is able to plan commercial flights.

1961

The Unimate is the first industrial robot. It works on a General Motors assembly line, transporting and welding die castings. The picture shows Unimate pouring a cup of coffee.

Around this time, researchers begin experimenting with adding sensor input to robot arms. In 1961, Heinrich A. Ernst develops a mechanical "hand" that uses tactile sensors to guide it. In 1971, the Stanford University Artificial Intelligence Laboratory develops a system that is able to solve the "Instant Insanity" puzzle using a camera. A video of the robot in action is available online.

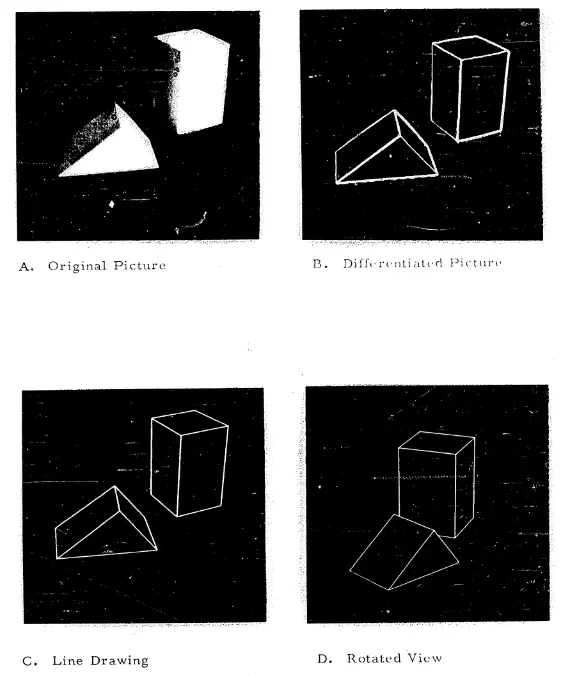

1963

Lawrence G. Roberts implements a program that can identify objects and determine their position and orientation in 3D space. An important part of the program is its ability to detect edges. This is done using a technique called the Roberts Cross, the first example of a gradient operator.

More advanced filters were developed in the following years. Examples include the Sobel operator, developed in 1968 by Irwin Sobel, and the Laplacian of Gaussian, developed by David Marr and Ellen Hildreth in 1980.
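The Roberts Cross estimates the image gradient from the two diagonal differences inside every 2x2 block of pixels. A minimal pure-Python sketch, assuming a grayscale image stored as a list of rows; the function name `roberts_cross` and the tiny test image are illustrative choices, not part of Roberts' program:

```python
import math


def roberts_cross(image):
    """Return the gradient magnitude for each 2x2 neighborhood of `image`.

    `image` is a list of equal-length rows of numbers; the result has one
    fewer row and one fewer column than the input.
    """
    rows, cols = len(image), len(image[0])
    edges = []
    for r in range(rows - 1):
        row = []
        for c in range(cols - 1):
            # Differences along the two diagonals of the 2x2 block.
            gx = image[r][c] - image[r + 1][c + 1]
            gy = image[r][c + 1] - image[r + 1][c]
            row.append(math.hypot(gx, gy))
        edges.append(row)
    return edges


# A tiny test image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
for row in roberts_cross(image):
    print([round(value, 2) for value in row])
# [0.0, 12.73, 0.0]
# [0.0, 12.73, 0.0]
```

The response is zero in the flat regions and peaks where the 2x2 block straddles the edge.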

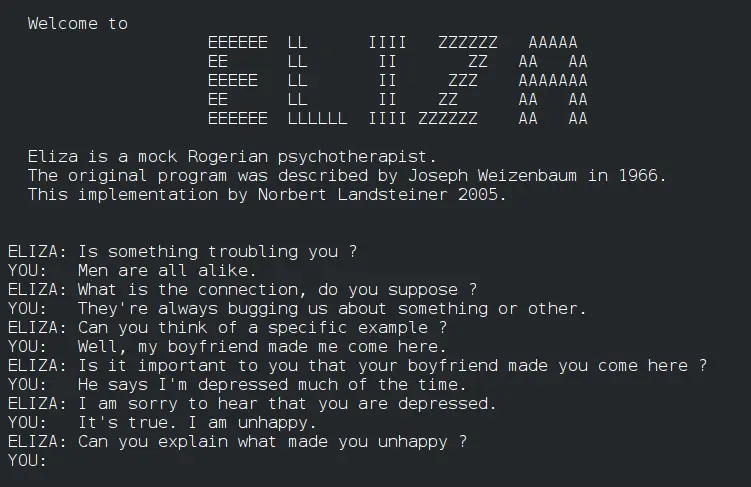

1964

Joseph Weizenbaum works on ELIZA from 1964 to 1967. ELIZA is an NLP program that is able to have a conversation with a human, even though the program has no understanding of what is being said. It is one of the first chatbots, and thus one of the first programs that can attempt the Turing Test.

In the same time period, several other attempts at conversational agents were being developed. Some examples include:

- Bert Green's BASEBALL. The program was able to answer basic questions about baseball based on a database of baseball matches.

- Robert Lindsay's SAD SAM (Sentence Appraiser and Diagrammer and Semantic Analyzing Machine). SAD SAM was able to answer questions about a family tree, after being given information about the family tree in natural language form.

- Daniel G. Bobrow's STUDENT, which was able to solve algebra problems formulated using a basic subset of English.

1965

Lotfi Zadeh introduces the term Fuzzy Logic, a form of logic where the truth value of a variable can be any real number between 0 and 1.

Since then, fuzzy logic has seen some adoption in AI. One famous example is Maytag Company's 1995 "IntelliSense" dishwasher, an intelligent dishwasher that uses fuzzy logic to determine optimal wash cycles. Fuzzy logic also has overlap with neural networks.
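To make graded truth values concrete, the sketch below uses the commonly cited Zadeh operators (minimum for AND, maximum for OR, complement for NOT). The `ramp` membership function and the dishwasher-style readings are hypothetical and do not describe how any actual product works.

```python
def fuzzy_and(a, b):
    """Zadeh conjunction: the minimum of the two truth values."""
    return min(a, b)


def fuzzy_or(a, b):
    """Zadeh disjunction: the maximum of the two truth values."""
    return max(a, b)


def fuzzy_not(a):
    """Zadeh negation: the complement of the truth value."""
    return 1.0 - a


def ramp(x, low, high):
    """Membership degree rising linearly from 0 at `low` to 1 at `high`."""
    if x <= low:
        return 0.0
    if x >= high:
        return 1.0
    return (x - low) / (high - low)


# Hypothetical sensor readings mapped to degrees of truth.
dirty = ramp(70, 20, 100)   # 0.625: "the dishes are dirty"
full = ramp(8, 4, 12)       # 0.5:   "the machine is full"
heavy_cycle = fuzzy_and(dirty, full)
print(dirty, full, heavy_cycle)  # 0.625 0.5 0.5
```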

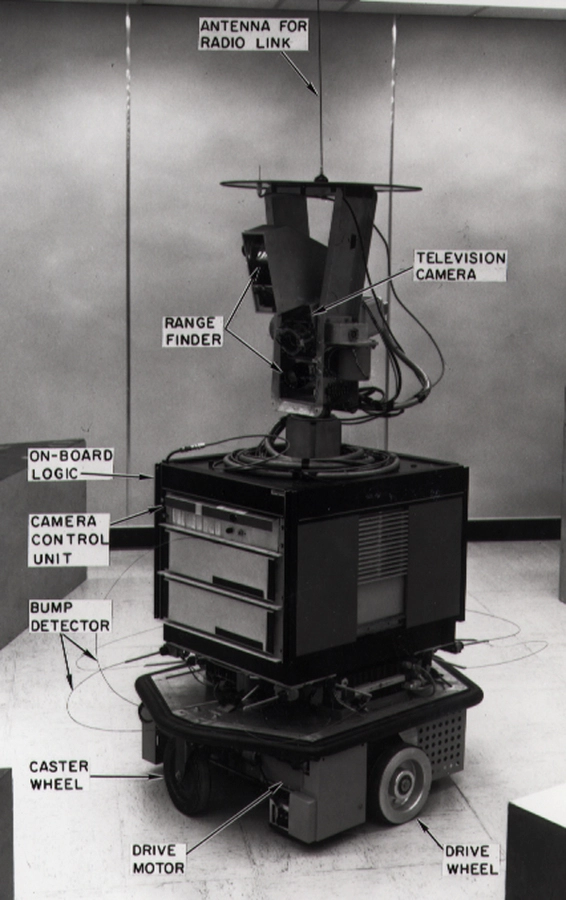

1966

SRI researchers combine advances in robotics, computer vision, and NLP to develop Shakey, the first mobile robot able to reason about its own actions, plan ahead, and learn. It uses vision, range-finding, and touch sensors.

The need for an efficient search method for Shakey led directly to the development of the A* heuristic search method.

1966

Early research efforts in NLP are concentrated on using computers to perform automatic translation. In 1954, IBM demonstrates a program that is able to translate Russian sentences into English. It causes a great deal of excitement and an increase in funding, but subsequent research in the field is disappointing.

In 1964, the Automatic Language Processing Advisory Committee (ALPAC) is established, with the goal of informing the US government about progress in machine translation. In 1966, they release a skeptical report, stating that "... there is no immediate or predictable prospect of useful machine translation". It causes a dramatic drop in funding and marks the beginning of the first AI winter.

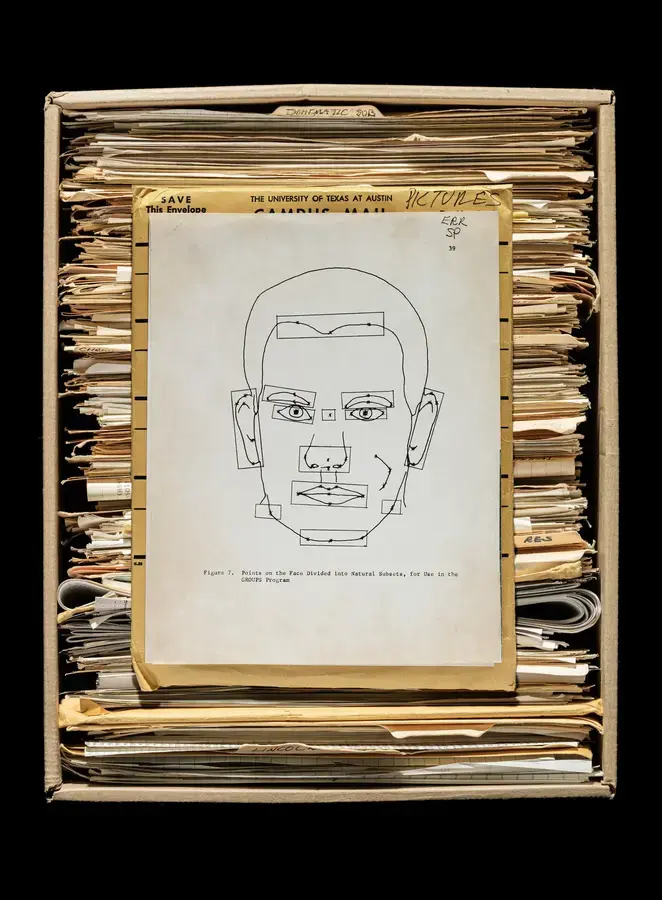

1967

Woodrow W. Bledsoe works on techniques for facial recognition, together with Charles Bisson and Helen Chan.

Their approach involves human-AI interaction where a human extracts the coordinates of a set of features from a photograph, which the computer then uses to calculate distances and find similarities.

1967

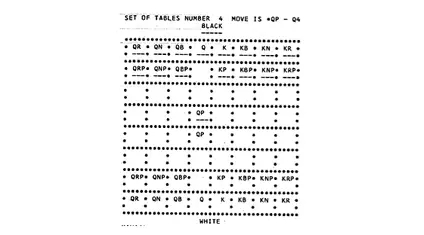

Kotok-McCarthy is the first computer program that is able to play chess convincingly. In 1965, it was challenged by the Russian ITEP to play a match against their own chess program, making it the first computer versus computer chess game.

Chess programs continue to improve in the following years. In a famous 1967 match, the program Mac Hack beats Hubert Dreyfus, who just two years earlier stated that "no chess program could play even amateur chess".

1960s

The 1960s are a period of enthusiasm and optimism for the future of AI. Herbert Simon predicts in 1957 that, within 10 years, a computer will be the world's chess champion. Marvin Minsky states that "in 30 years we should have machines whose intelligence is comparable to man's", in a 1968 press release for the movie 2001: A Space Odyssey.

1970s

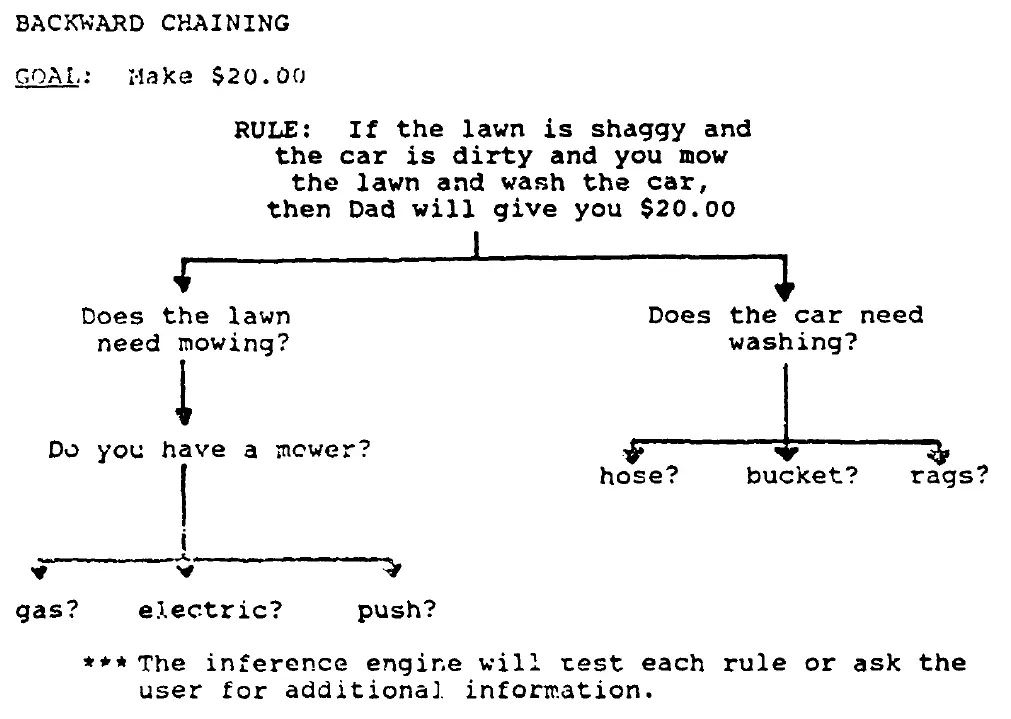

Over the course of five or six years, researchers at Stanford University develop MYCIN, an expert system able to assist in medical assessments by asking a series of questions. MYCIN performs slightly better than a human professional.

The program is based on a knowledge base built by encoding the knowledge of experts as probabilistic rules. An inference engine uses this knowledge base to decide which questions to ask and to determine the result. Separating the knowledge base and the inference engine makes it possible to adapt MYCIN to other domains with few adjustments, leading to a large growth in expert systems going into the 1980s.

1971

DARPA funds the 5-year Speech Understanding Research (SUR) program in October 1971. Among other projects, it leads to the creation of the speech recognition program DRAGON. This is the first AI program to employ hidden Markov models (HMMs). DRAGON's spinoff company is still a leading player in the speech-recognition market.

Other notable projects in the SUR program include HARPY and HEARSAY-II.

The results of the SUR program were found to be insufficient to warrant extra funding.

1972

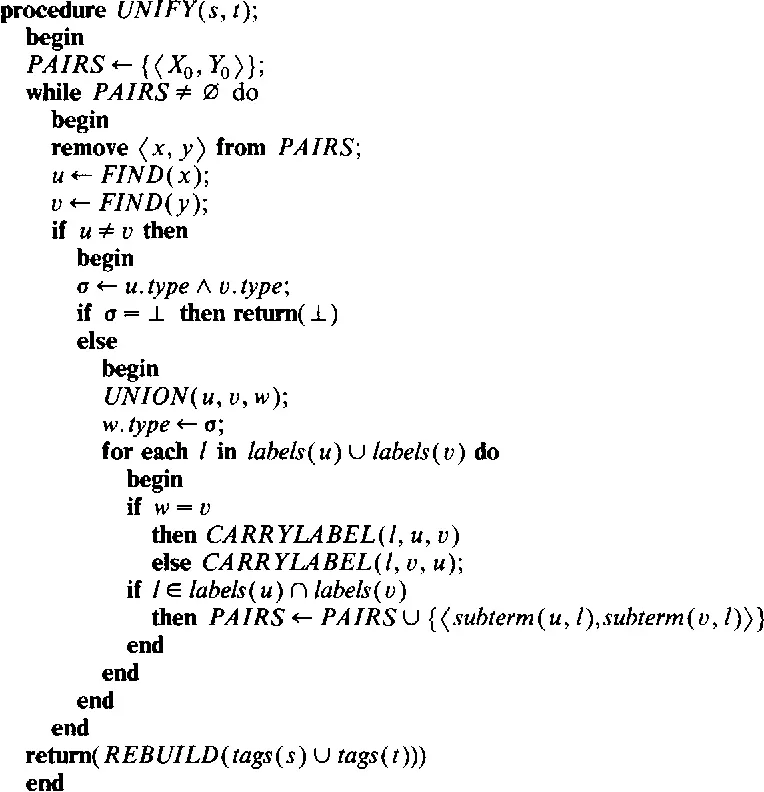

Robert A. Kowalski develops a more efficient algorithm to perform resolution, called "SL-resolution". Based on his work, Alain Colmerauer and Philippe Roussel create PROLOG (PROgrammation en LOGique), one of the first logic programming languages.

1979

Fernando Pereira and David H.D. Warren create CHAT-80, a chat system that can answer questions posed in English about a database of geographical facts. These questions can be very complex, for example:

- What is the ocean that borders African countries and that borders Asian countries?

- What is the total area of countries south of the Equator and not in Australasia?

The system is based on Definite Clause Grammars (DCGs), which are processed using Prolog. A modernized version of the codebase is available on GitHub.

1980s

Classical AI often relies on search trees, which suffer from "Combinatorial Explosion". Even with a small branching factor, searching an entire tree quickly becomes intractable. Combined with conclusions from complexity theory, this leads people to doubt AI's ability to handle more than just "toy problems".
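The growth is easy to quantify: a complete tree with branching factor b and depth d contains 1 + b + b^2 + ... + b^d nodes. A short sketch, where the function name `tree_size` and the chosen depths are only illustrative:

```python
def tree_size(branching_factor, depth):
    """Number of nodes in a complete search tree with the root at depth 0."""
    return sum(branching_factor ** level for level in range(depth + 1))


# Even a branching factor of 3 grows out of reach quickly.
for depth in (5, 10, 20):
    print(depth, tree_size(3, depth))
# 5 364
# 10 88573
# 20 5230176601
```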

Furthermore, the early 1980s are a period of unrealistically high expectations for AI. It proves impossible to fulfill these expectations, leading to an increase in pessimism and criticism, and ultimately research funds being cut. It is the second AI Winter.

1982

Japan launches a project to develop "Fifth Generation Computer Systems" (FGCS), advanced computer systems with many parallel processors. Its ultimate goal is to produce computers that can perform AI-style inference on large knowledge bases. For this reason, PROLOG is chosen as the base language for the machine, although it is later replaced with GHC, a logic programming language more adapted to concurrency.

The rest of the world responds by creating their own research projects, such as the Alvey Programme in the United Kingdom, ESPRIT in Europe, and the Strategic Computing Initiative (SCI) in the United States, leading to a surge in AI funding.

1984

Douglas Lenat starts the Cyc project. It attempts to codify all common-sense knowledge of how the world works, which can then serve as the basis for other AI projects.

As of 2017, it contains about 24.5 million rules, which took about 1000 person-years to construct.

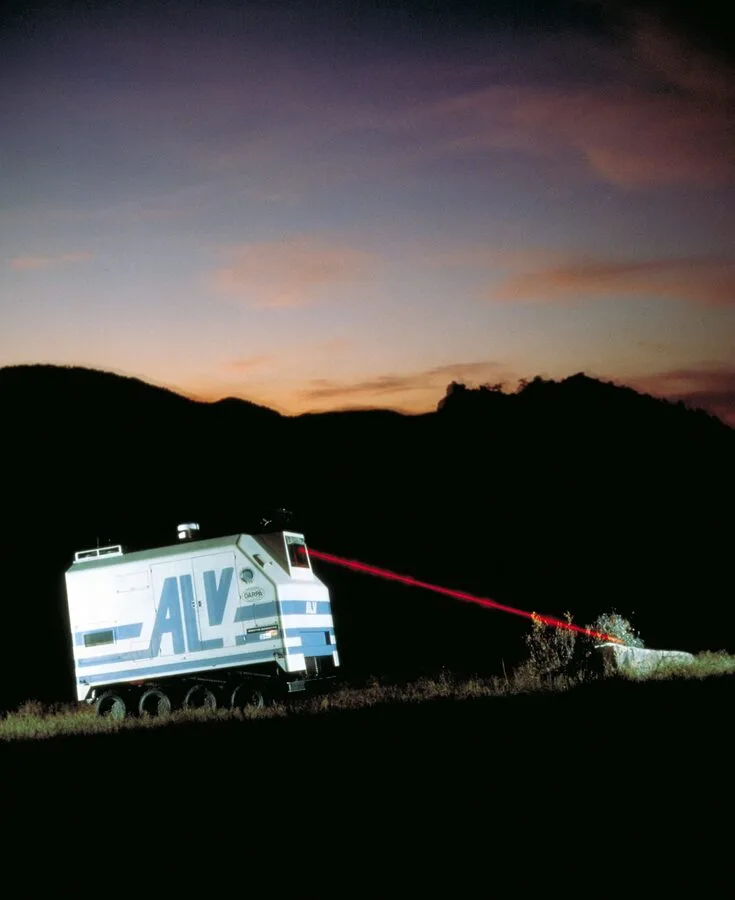

1984

With funding from the SCI, Martin Marietta (later Lockheed Martin) begins the Autonomous Land Vehicle (ALV) project. The goal of the project was to achieve speeds of up to 90 km/h on roads with other vehicles by 1991.

The ALV program is cancelled in 1988 due to insufficient progress and limited computing power, but research in autonomous vehicles continues and improves year by year.

1998

NASA launches Deep Space 1, equipped with the "Remote Agent" (RA) technology. RA serves as an intermediary between operators on Earth and sensors on board the spacecraft. Operators can use RA to specify which goals they want it to achieve, as opposed to using detailed sequences of commands.

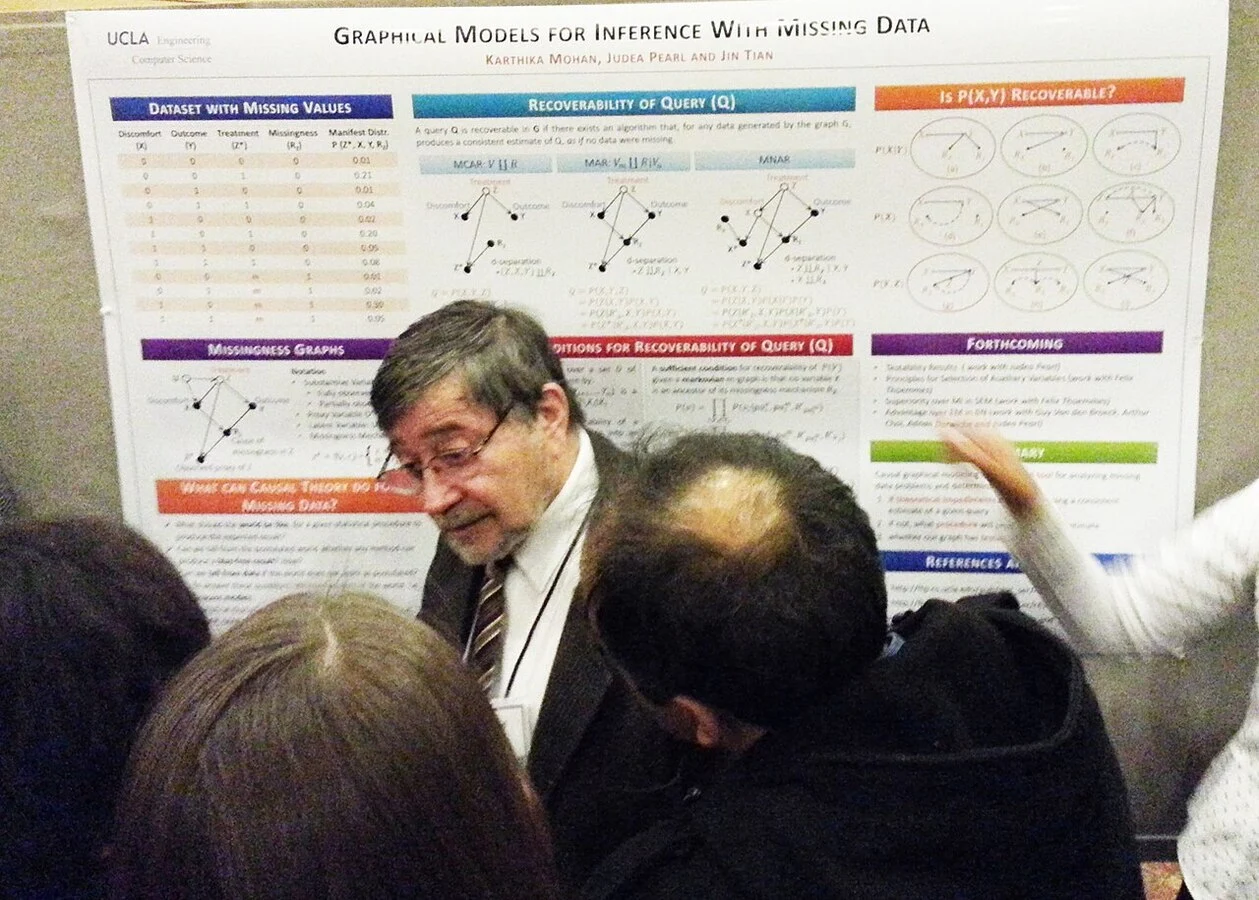

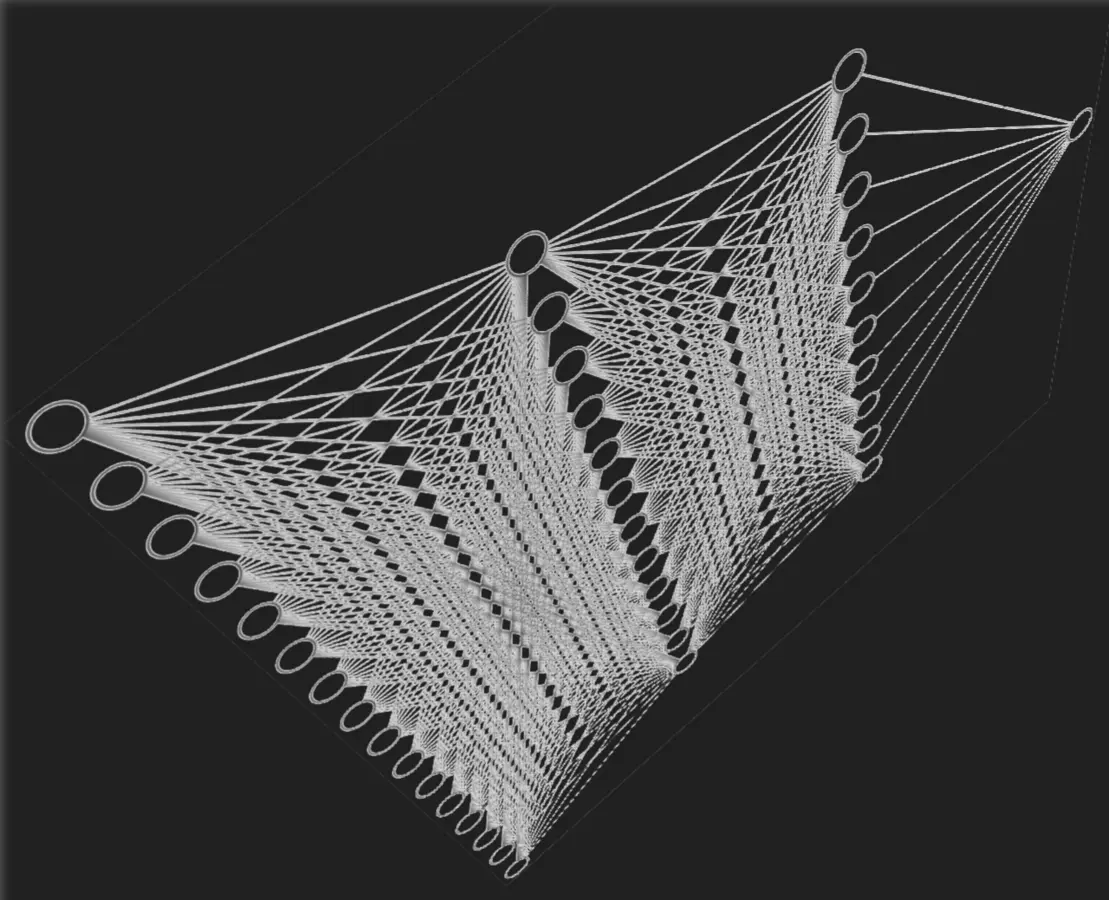

1986

Judea Pearl coins the term Bayesian network. Bayesian networks are able to combat the combinatorial explosion when dealing with probabilities.

1980s

The 1980s and early 1990s see a lot of advances in machine learning, too many to list separately. Some examples include:

- David Rumelhart, Geoffrey E. Hinton, and Ronald J. Williams formalize back propagation.

- Terrence J. Sejnowski and Charles Rosenberg create NETtalk, a neural network that learned to talk.

- Advances in reinforcement learning, such as Q-learning.

- Vladimir Vapnik et al. develop Support Vector Machines, one of the most robust prediction methods.

1994

Checkers program Chinook is declared Man-Machine World Champion after six drawn games against Marion Tinsley, a famously unmatched checkers champion.

1996

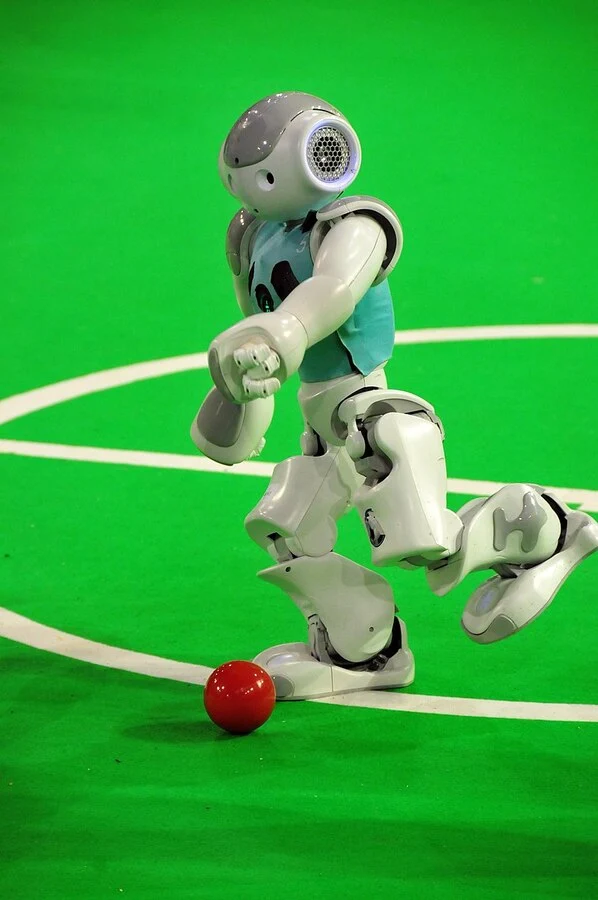

The annual robotics competition RoboCup is founded. The ultimate goal of the project is to assemble a team of humanoid robots that can win a game of soccer against the winner of the most recent World Cup.

1997

Deep Blue beats world chess champion Garry Kasparov, making it the first defeat of a world chess champion by a computer.

2003

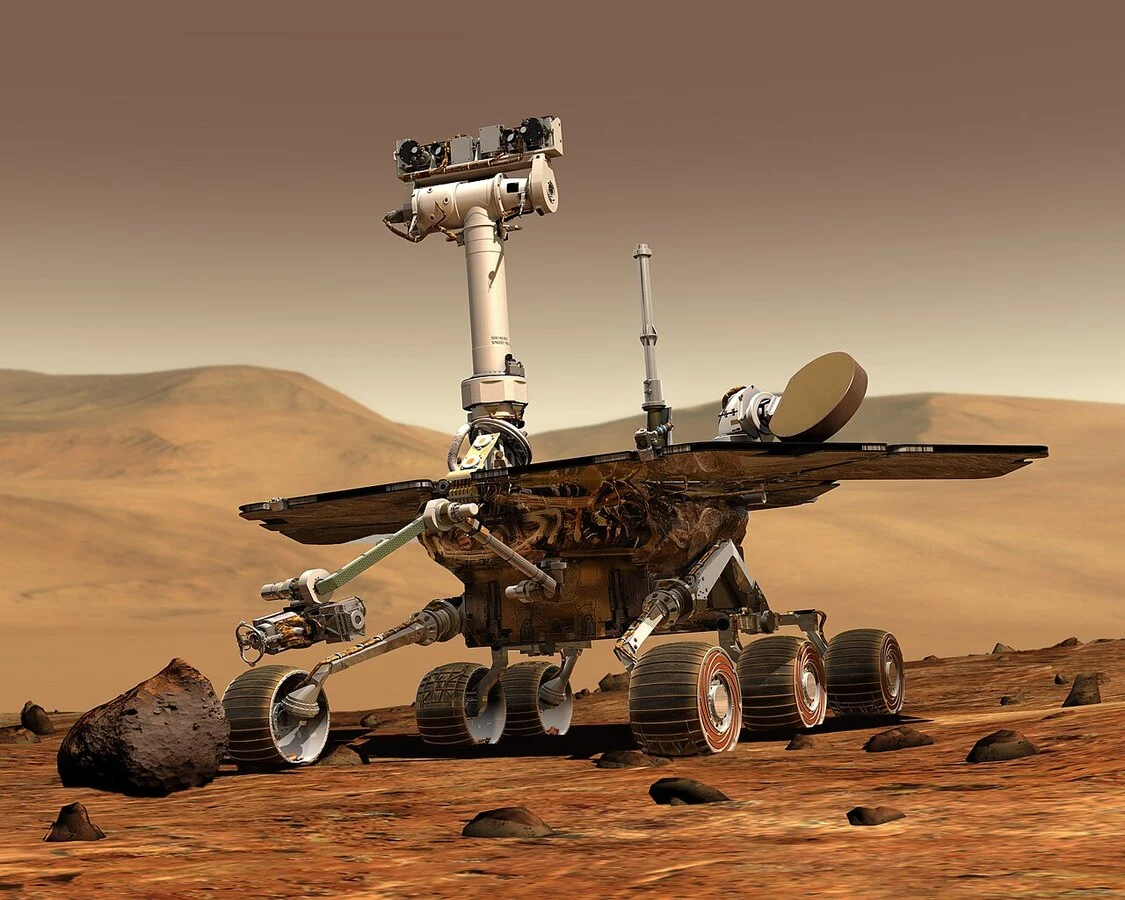

NASA launches Spirit and Opportunity, two robotic rovers that autonomously explore the surface of Mars. Opportunity was active until 2018.

2004

DARPA hosts its first Grand Challenge, a competition with a cash prize of $1,000,000 for the team that can complete an extensive track using a driverless vehicle. Out of the 106 participating teams, none are able to finish the course.

A second Grand Challenge is held the following year, with double the prize money. This time, five vehicles make it to the finish line.

Grand Challenges continue to be held, with different goals, such as robotics and subterranean navigation.

2005

Boston Dynamics, funded by DARPA, develops BigDog, a dynamically stable quadruped military robot designed to serve as a robotic pack mule. The project was discontinued in 2015 because it was deemed too loud for use in combat.

Boston Dynamics remains a leading company for highly mobile robots to this day.

2007

Dharmendra Modha and his team publish the paper Anatomy of a cortical simulator, in which they describe a computer simulation of a rat-scale model of the cortex. It can be considered a bottom-up approach to human-level intelligence.

Modha leads the SyNAPSE project, a DARPA program with the goal of developing a new kind of computer that mimics the mammalian brain.

2007

Jonathan Schaeffer and his team announce that they have solved checkers. A solved game is a game whose outcome can be predicted from every position, assuming perfect play from both sides. For checkers, perfect play always leads to a draw.

2016

AlphaGo, a computer program that plays Go, beats the world's second-best Go player Lee Sedol in 4 games out of 5. Up until this point, Go was considered the next AI challenge in board games, after checkers and chess. A documentary of the events is available for free online.

As opposed to Deep Blue's chess victory in 1997, which was achieved using brute force search, AlphaGo relies on neural networks and reinforcement learning as well.

2022

Language models have been a topic of research since the 1980s. Large Language Models (LLMs) are the next step in this research, applying language models at a large scale - both in the amount of training data and in the number of parameters. They are able to achieve good understanding and generation of natural language.

OpenAI's chatbot ChatGPT, based on the LLM GPT-3.5, is released in November 2022. It is an enormous success: with over 100 million users over the course of a few months, it is the fastest-growing consumer software application to date.

2023

The AI Safety Summit is held in the United Kingdom. It is the first global summit on Artificial Intelligence. Its goal is to achieve global agreements on the challenges and risks of AI.

2045

Vernor Vinge describes the singularity event, where an ever-increasing rate of progress in computing and explosive technological advancement may some day cause large computer networks to "wake up" as a superhuman entity. He estimates that it will happen by 2030.

AI researcher Ray Kurzweil popularizes this concept in his book The Singularity is Near, where he places the event in about 2045.